This article analyzes the current challenges in semiconductor post-silicon validation and shows how a standardized software framework can address each of them, creating a scalable and adaptable testing environment.

Semiconductor devices have become increasingly sophisticated to meet the demands of fast-paced industries, which in turn requires more extensive and intricate testing. However, the tools used in post-silicon validation are typically fragmented across teams and locations, each developing and maintaining software that caters to its own needs. While some of these software interfaces are built from scratch, others leverage a variety of commercial off-the-shelf (COTS) test sequencers. This non-uniformity in software interfaces and toolchains limits data collection and correlation. As a result, engineering teams waste significant effort developing similar functionality in different groups. With more IP being integrated into a single chip, previously distinct validation teams must now work together to bring the product to market faster.

To address these growing complexities, it is essential to adopt a framework that can:

- Reuse software assets and code across teams, projects, and programs.

- Enable an intuitive debug environment for designers and validation engineers with increased automation.

- Simplify the onboarding of new engineers into validation activities.

Despite the apparent simplicity of the primary objectives, challenges arise based on factors such as team size, organization structure, and other aspects like software practices and hardware platforms.

Solutions That a Standardized Framework Can Offer

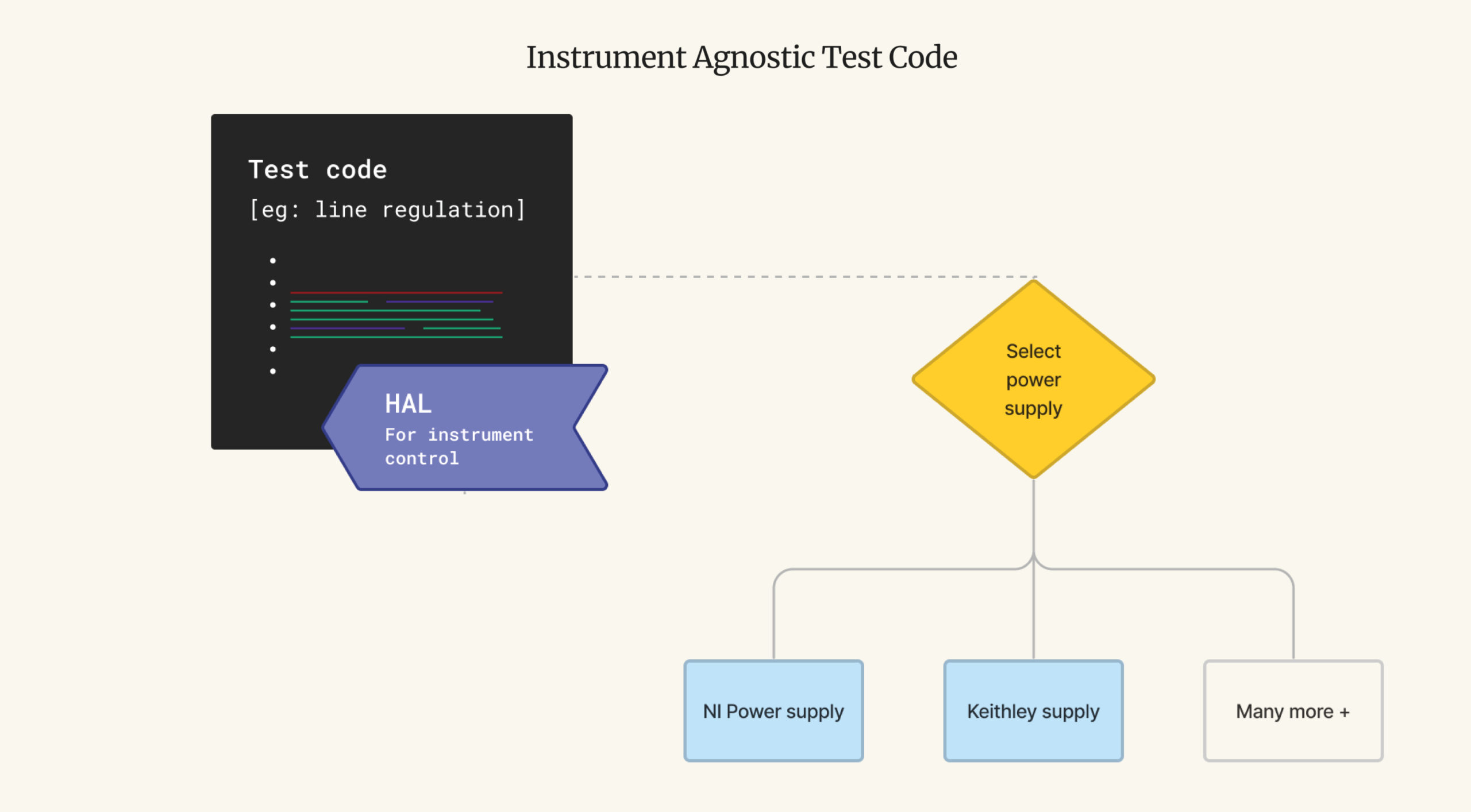

1. Reuse Test Code Despite Varying Instrument Setups

Post-silicon validation benches often introduce changes in instrument models across stations based on instrument availability, project requirements, and other considerations. This variability poses a challenge when sharing test code across projects. Validation engineers may need to constantly update or rewrite code to accommodate new instrument models or drivers, resulting in repetitive tasks throughout the organization.

While standardizing instrument models across validation stations is a potential solution, it proves expensive and limits an engineer’s flexibility in choosing the most suitable instruments to validate new products.

A more practical alternative is to apply software design principles and build an instrument-agnostic test program. Object-oriented programming makes this possible through a “Hardware Abstraction Layer” (HAL): a layer embedded in the test program through which all instrument control passes.

The HAL is a one-time development effort for the organization and is reusable across projects. Programming languages with native instrument driver support, such as LabVIEW, cover both NI and third-party instruments, which expedites HAL development and helps maintain code quality. Where native drivers don’t align with the HAL API design, SCPI commands can be sent directly to the instrument instead.

For languages lacking off-the-shelf instrument driver support, referencing the instrument’s remote control programming manual allows engineers to employ SCPI commands for control and configuration.

In essence, adopting a test program written with HALs allows instrument model swaps within a project without significant code edits and makes code reuse across diverse projects possible. This streamlines development efforts and enhances adaptability to evolving design requirements and instrument configurations in post-silicon validation.
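
The HAL idea described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference design: the class names, the `FakeTransport` stand-in, and the command strings for the two vendors are all hypothetical. Real command strings would come from each instrument's programming manual.

```python
from abc import ABC, abstractmethod


class FakeTransport:
    """Stand-in for a real instrument connection; it only records commands."""

    def __init__(self):
        self.sent = []

    def write(self, command: str) -> None:
        self.sent.append(command)


class PowerSupply(ABC):
    """HAL interface: the only API that test code is allowed to call."""

    def __init__(self, transport):
        self.transport = transport

    @abstractmethod
    def set_voltage(self, channel: int, volts: float) -> None: ...

    @abstractmethod
    def enable_output(self, channel: int, enabled: bool) -> None: ...


class VendorAPowerSupply(PowerSupply):
    # Hypothetical command set: select the channel first, then configure it.
    def set_voltage(self, channel, volts):
        self.transport.write(f"INST:NSEL {channel}")
        self.transport.write(f"VOLT {volts:.3f}")

    def enable_output(self, channel, enabled):
        self.transport.write(f"INST:NSEL {channel}")
        self.transport.write(f"OUTP {'ON' if enabled else 'OFF'}")


class VendorBPowerSupply(PowerSupply):
    # Hypothetical command set: the channel number is part of the command.
    def set_voltage(self, channel, volts):
        self.transport.write(f"SOUR{channel}:VOLT {volts:.3f}")

    def enable_output(self, channel, enabled):
        self.transport.write(f"OUTP{channel} {1 if enabled else 0}")


def power_up_dut(supply: PowerSupply) -> None:
    """Test step written once against the HAL, reusable with either model."""
    supply.set_voltage(channel=1, volts=1.8)
    supply.enable_output(channel=1, enabled=True)
```

Swapping the instrument model then means constructing a different subclass at station setup; `power_up_dut` itself does not change.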

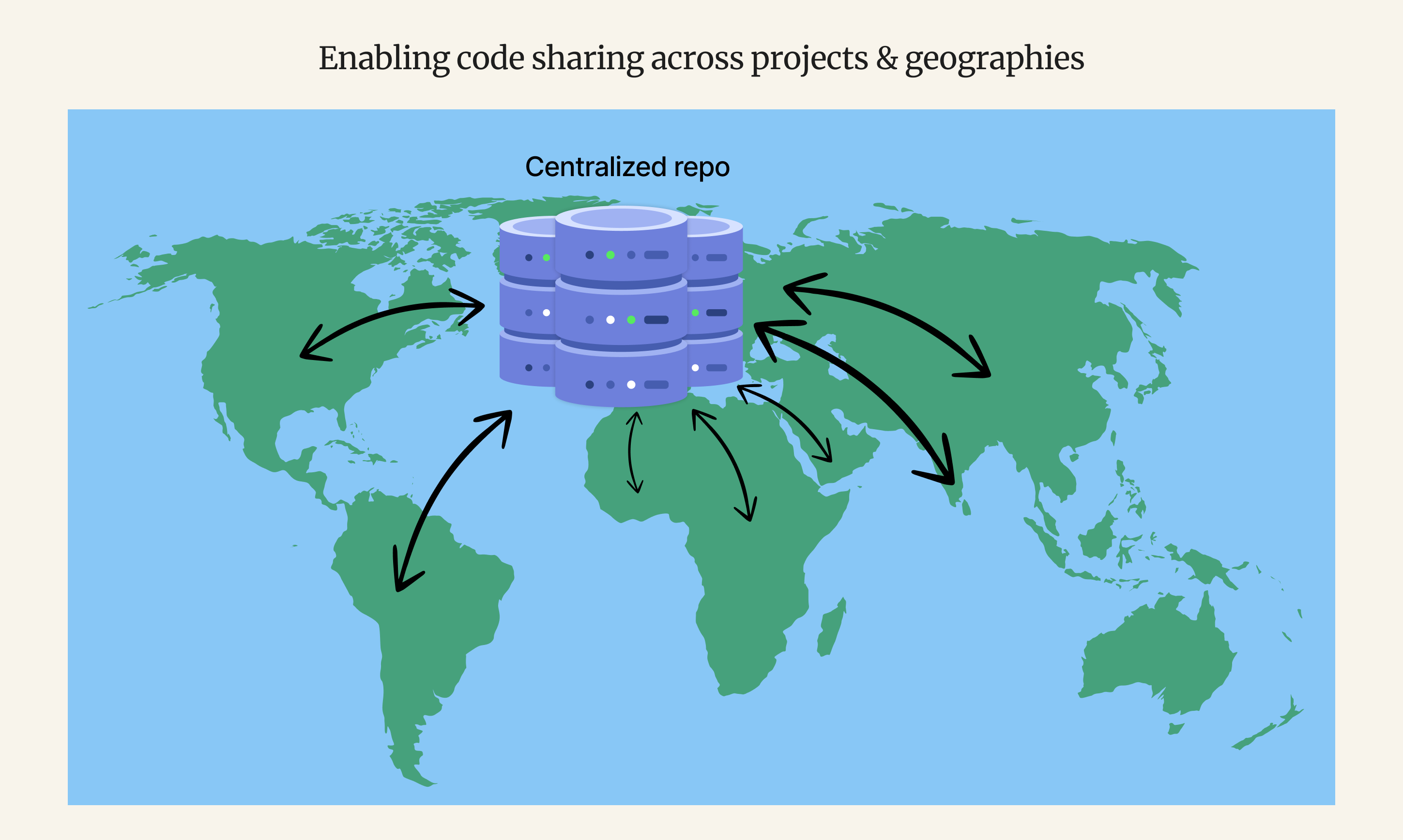

2. Address Inefficiencies in Code Sharing across Projects with a Centralized Repository

In test code development, managing dependencies proves to be a critical challenge. Often, test code relies on reusable components like Hardware Abstraction Layers (HALs), digital communication libraries, and other reusable libraries. Sharing this code without properly managed dependencies across projects becomes daunting, leading to broken code and additional efforts for engineers to make it usable.

Establishing centralized repositories is a transformative solution to address this difficulty. These repositories house well-documented, versioned reusables and test code accessible to all members of the validation community.

Versioning and dependency assignment are vital features of this centralized approach. Test code is linked to specific dependencies, ensuring that when a validation engineer accesses the code, they are informed of the required reusables. This process can be automated, enhancing workflow efficiency.
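
One way such an automated check might look is sketched below. The manifest layout, the package names, and the "minimum version" rule are assumptions made for illustration; an actual repository would rely on the package manager's own metadata and resolution rules.

```python
def parse_version(text: str) -> tuple:
    """Turn '2.1.0' into (2, 1, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def missing_dependencies(manifest: dict, installed: dict) -> list:
    """Return readable problems; an empty list means the station is ready."""
    problems = []
    for name, minimum in manifest["requires"].items():
        have = installed.get(name)
        if have is None:
            problems.append(f"{name}: not installed (need >= {minimum})")
        elif parse_version(have) < parse_version(minimum):
            problems.append(f"{name}: found {have}, need >= {minimum}")
    return problems


# Hypothetical manifest stored alongside the test code in the repository.
ldo_test_manifest = {
    "name": "ldo_line_regulation",
    "version": "1.4.0",
    "requires": {"power_supply_hal": "2.1.0", "i2c_library": "1.0.3"},
}

# Hypothetical snapshot of the packages installed on one validation station.
station_packages = {"power_supply_hal": "2.0.5"}

for problem in missing_dependencies(ldo_test_manifest, station_packages):
    print(problem)
```

Comparing versions as integer tuples rather than strings matters here: as text, "2.10.0" sorts before "2.9.0", which would report a newer reusable as outdated.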

The repository accommodates the varied packaging and distribution techniques of different programming languages. For instance, LabVIEW utilizes VIP and NIPkg package formats, while C# relies on NuGet packages. This versatility ensures seamless integration regardless of the programming language used.

The centralized repository serves as more than a storage space. It becomes a portal for validation engineers to explore existing test code, mitigating the risk of redundant efforts and rework. Offering a comprehensive view of available resources not only simplifies access to standardized test code but also acts as a catalyst for optimizing time and effort.

Establishing centralized repositories represents a paradigm shift in test code management. It not only addresses the challenges of dependency management but also fosters collaboration, efficiency, and a culture of resource-sharing within the validation community.

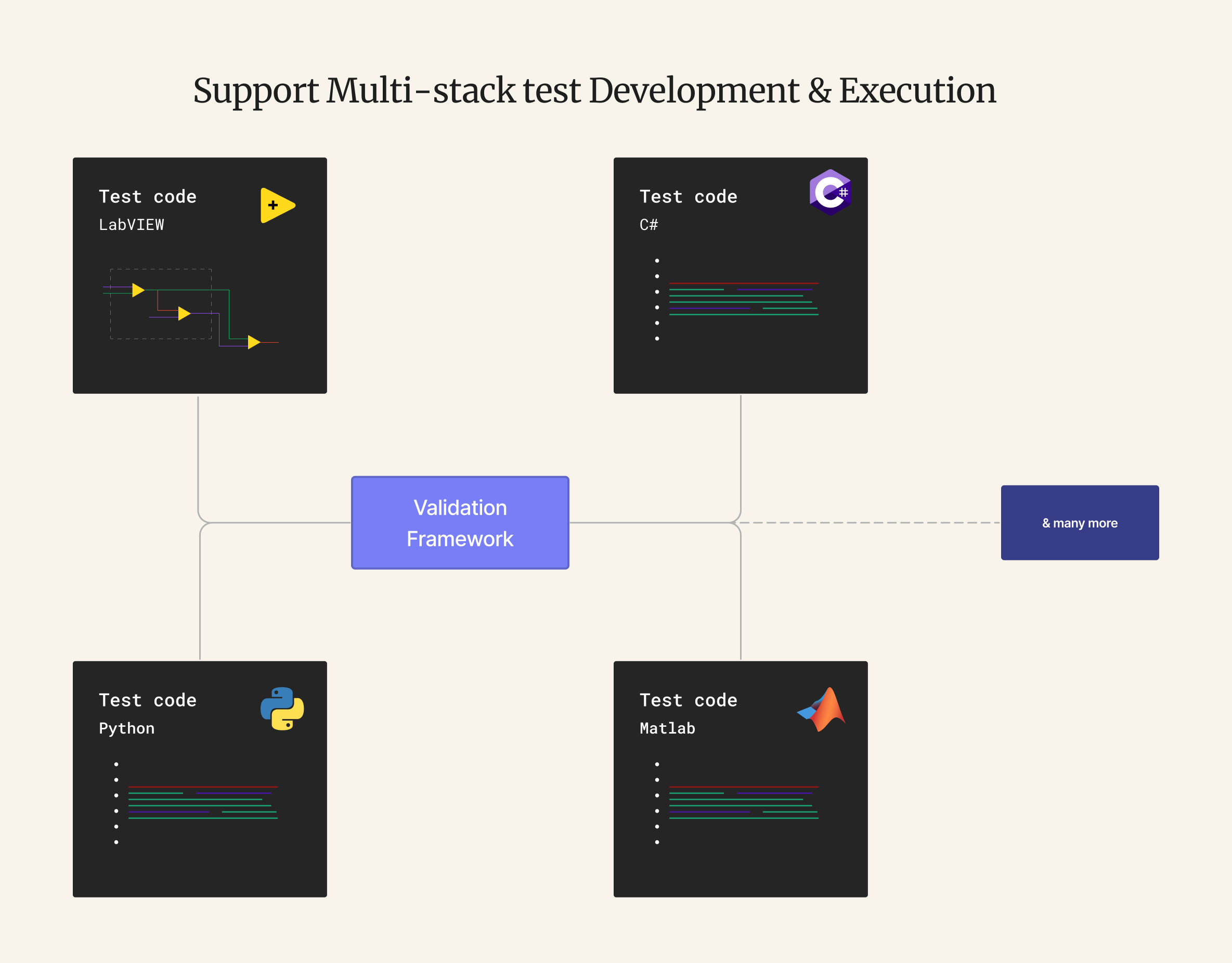

3. Consideration of Multi-stack Development

When a framework is used to standardize test code development across an organization, the choice of supported programming languages is critical. Several factors drive this decision:

- Diverse Engineer Skill Sets: Engineers within an organization often possess proficiency in different programming languages. Supporting various languages accommodates these diverse skill sets, allowing engineers to contribute effectively based on their expertise.

- Language Strengths: No single programming language excels in all aspects. Each language offers unique strengths. For example, MATLAB provides rich toolboxes for applications like signal processing and control systems, Python offers extensive library support for scientific computing and visualization, and LabVIEW excels in instrument control, data acquisition, real-time test and measurement, and graphical programming.

- Equipment Manufacturer Support: Equipment manufacturers may provide driver support exclusively for a specific programming language. The chosen framework should align with these manufacturer specifications to ensure seamless integration.

The programming language selected for the framework should offer robust connectivity and support to integrate and execute test code written in other languages. The framework should also provide a mechanism to share instrument sessions and data between these programming languages. This facilitates a cohesive and collaborative development environment.
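
The session-sharing requirement can be illustrated with a small registry that hands out one shared session per instrument alias. This sketch only shows the bookkeeping inside a single Python process. The `SessionRegistry` and `FakeSession` names are hypothetical, and sharing a session across language runtimes would depend on whatever mechanism the chosen framework provides.

```python
class SessionRegistry:
    """Hands out one shared session per instrument alias, with reference counting."""

    def __init__(self, opener):
        self._opener = opener   # callable: resource name -> session object
        self._sessions = {}     # alias -> [session, reference count]

    def acquire(self, alias: str, resource: str):
        """Open the session on first use; later callers get the same object."""
        if alias not in self._sessions:
            self._sessions[alias] = [self._opener(resource), 0]
        entry = self._sessions[alias]
        entry[1] += 1
        return entry[0]

    def release(self, alias: str) -> None:
        """Close the session only when the last user has released it."""
        entry = self._sessions[alias]
        entry[1] -= 1
        if entry[1] == 0:
            entry[0].close()
            del self._sessions[alias]


class FakeSession:
    """Stand-in for a driver session so the sketch runs without hardware."""

    def __init__(self, resource: str):
        self.resource = resource
        self.closed = False

    def close(self) -> None:
        self.closed = True


registry = SessionRegistry(FakeSession)
from_test_a = registry.acquire("dmm", "GPIB0::22::INSTR")
from_test_b = registry.acquire("dmm", "GPIB0::22::INSTR")
print(from_test_a is from_test_b)
```

Two test modules asking for the alias `"dmm"` receive the same session object, so neither reopens or resets the instrument underneath the other.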

LabVIEW is a good example of a language for building a framework in this context. It can access reusables and tests written in diverse languages such as Python, .NET (C#, VB.NET), MATLAB, and C/C++. This approach leverages the strengths of different languages while consolidating them on a common platform, which is the kind of adaptability a modern validation framework requires.

The decision on programming language support is pivotal, shaping the framework’s ability to foster collaboration, enhance productivity, and accommodate the diverse skill sets and preferences inherent in a dynamic engineering environment.

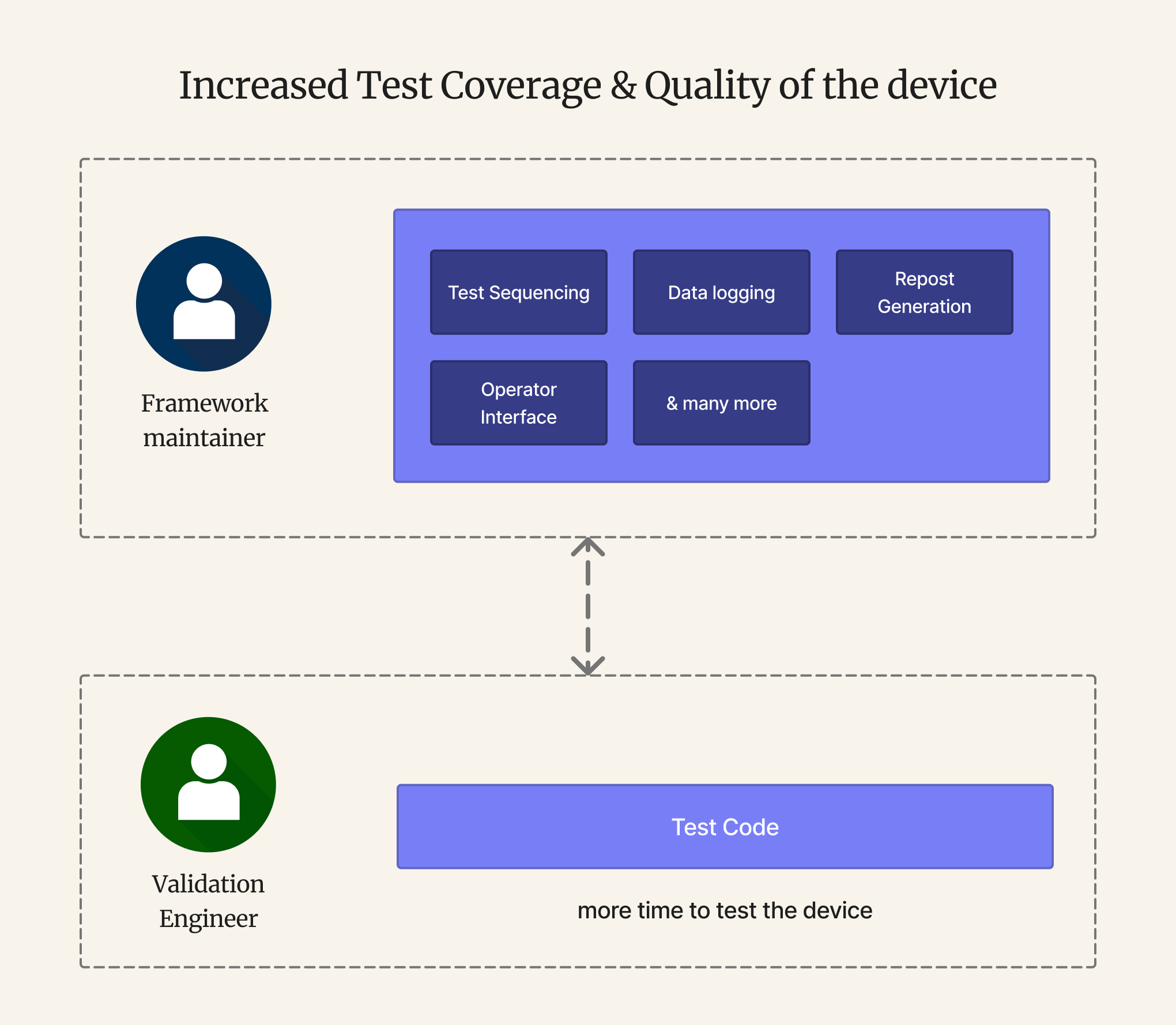

4. Save Time by Standardizing the Infrastructure for Testing and Automating the Test Execution

Validation engineers often spend considerable effort building supplementary infrastructure such as the user interface, UUT tracking, result archival, report generation, and, notably, automated test execution to characterize the device under different conditions. These elements are indispensable for comprehensive testing across products.

On closer inspection, these software modules are not specific to any Device Under Test (DUT) or product. They are product-independent and can be termed framework-specific components. As foundational building blocks, they apply to every product and together form a standardized framework for efficient testing. Because they are product-agnostic, developing and maintaining them once for the whole organization reduces overall test development effort.

Organizations can construct these framework components from scratch or leverage built-in and community-reusable elements supported by programming languages such as LabVIEW, C#, and Python. These languages offer robust capabilities for data logging, report generation, and automated test sequencing. Additionally, infrastructure tools like TestStand add considerable value by enabling test and measurement sequencing, with strong support for sequencing tests written in languages including LabVIEW, .NET, Python, C/C++, and others.

Recognizing and enabling framework components empowers validation engineers, giving them more time to concentrate on the actual tests to be performed on the product. The strategic emphasis on these foundational components not only streamlines current testing procedures but also lays the groundwork for scalable and adaptable testing practices in the dynamic landscape of semiconductor post-silicon validation engineering.
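
To make the idea of product-independent components concrete, here is a minimal sketch of two of them: a condition sweep and a result archive. The function names and the placeholder measurement are hypothetical, and a production setup would more likely use a dedicated sequencer than a hand-written loop.

```python
import csv
import io
import itertools


def run_sweep(test, conditions: dict, dut_id: str) -> list:
    """Run `test` at every combination of conditions and collect result rows."""
    names = list(conditions)
    rows = []
    for values in itertools.product(*(conditions[n] for n in names)):
        point = dict(zip(names, values))
        rows.append({"dut_id": dut_id, **point, "result": test(**point)})
    return rows


def write_report(rows: list, stream) -> None:
    """Archive results as CSV; expects at least one row and a file-like stream."""
    writer = csv.DictWriter(stream, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)


def measure_output_voltage(vin: float, temp_c: int) -> float:
    # Placeholder arithmetic standing in for a real instrument measurement.
    return round(0.5 * vin - 0.0001 * temp_c, 4)


rows = run_sweep(
    measure_output_voltage,
    {"vin": [3.0, 3.6], "temp_c": [-40, 25, 85]},
    dut_id="SN001",
)
report = io.StringIO()
write_report(rows, report)
print(report.getvalue())
```

Neither `run_sweep` nor `write_report` knows anything about the product under test. Only `measure_output_voltage` is product-specific, which is the separation the framework components are meant to provide.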

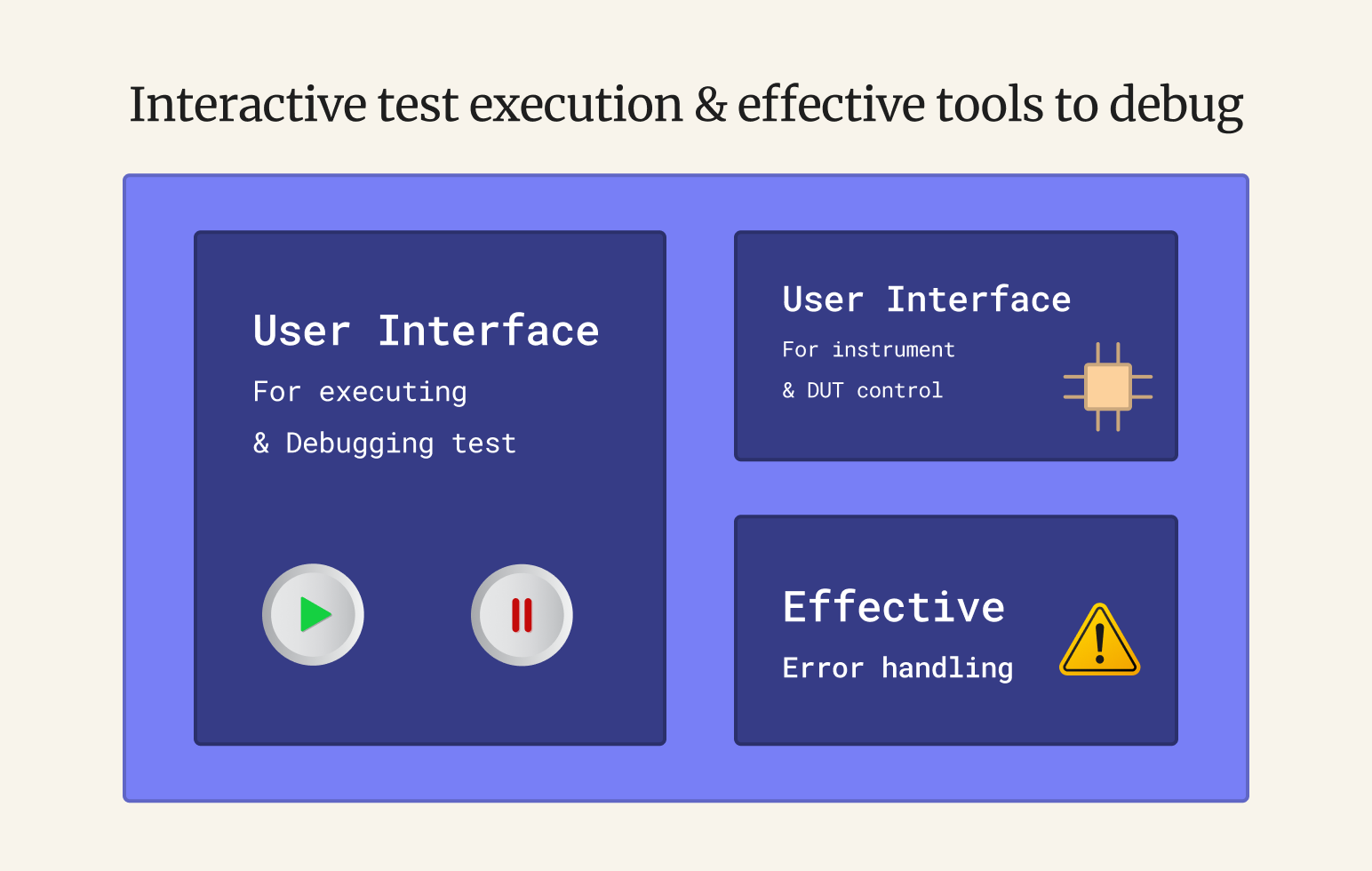

5. Enabling Interactive Execution and Efficient Debugging

An interactive debugging environment is indispensable not only for validation engineers but also for design and application engineers, especially during critical phases like device wakeup and when unexpected results arise. This entails several crucial aspects:

- Interactive Control of Instruments and Device Registers: The environment should allow engineers to control instruments and device registers interactively. This can be achieved through an intuitive GUI or remote-control panels, providing real-time access for effective debugging.

- Flexible Execution of Measurements: Engineers should be able to execute measurements interactively through user interfaces, allowing them to step into the code for debugging purposes. This capability is crucial for pinpointing the root cause of errors and refining the code during the testing phase.

- Error Handling and Runtime Notifications: Robust error handling is paramount. The test code should capture runtime errors from instruments and other reusable components and notify users promptly. Moreover, the code should be capable of altering the execution flow based on certain errors, allowing for the execution of routines to shut down instruments or handle specific events safely.
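
The error-handling behavior in the last item can be sketched as follows. The exception type, the `FakeSupply` class, and the `notify` callback are hypothetical names chosen for illustration; the point is only the control flow: notify the user, abort the step, and always power down.

```python
class InstrumentError(Exception):
    """Raised when an instrument reports a runtime error."""


class FakeSupply:
    """Stand-in for a power supply so the sketch runs without hardware."""

    def __init__(self):
        self.output_on = False

    def enable_output(self, enabled: bool) -> None:
        self.output_on = enabled


def run_with_safe_shutdown(test_step, supply, notify) -> bool:
    """Run one test step; report instrument errors and always power down."""
    try:
        supply.enable_output(True)
        test_step()
        return True
    except InstrumentError as err:
        # Alter the execution flow: tell the user and abort this step.
        notify(f"Instrument error, aborting step: {err}")
        return False
    finally:
        # Runs on success and on failure, so the DUT is never left powered.
        supply.enable_output(False)


def overcurrent_step():
    raise InstrumentError("overcurrent on channel 1")


supply = FakeSupply()
passed = run_with_safe_shutdown(overcurrent_step, supply, print)
print("passed:", passed, "| output still on:", supply.output_on)
```

Placing the shutdown in `finally` rather than in the `except` branch means the supply is also switched off when the step succeeds or raises an unexpected exception type.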

For interactive execution and effective debugging, the chosen framework and programming language must empower engineers with these capabilities without imposing additional development overhead. Framework developers should prioritize providing seamless access to these features.

Languages like LabVIEW stand out in this regard, offering out-of-the-box user interface support that significantly enhances debugging and error-handling capabilities. Similarly, languages like C# provide a strong foundation, and frameworks can leverage these language features to augment debugging capabilities further.

Incorporating interactive features into the framework not only facilitates efficient debugging but also elevates the overall development experience for engineers working on semiconductor post-silicon validation.

Wrapping Up

In conclusion, an effective semiconductor validation framework reduces time to market and improves efficiency through standardization and code reuse. It raises device quality by expanding test coverage, unifies data analysis across the product lifecycle, and delivers an advanced debug experience. It also provides well-defined structures, documentation, and processes so that automation software can be recreated quickly. Finally, it supports easy onboarding, reducing the learning curve for engineers transitioning between product lines.

Written by

Venkatesh Perumal, Pranay Chandragiri, and Naveenkumar Nachimuthu

December 1, 2023